DATASET AVAILABLE

SHREC'19 track

Matching Humans with Different Connectivity

Outline

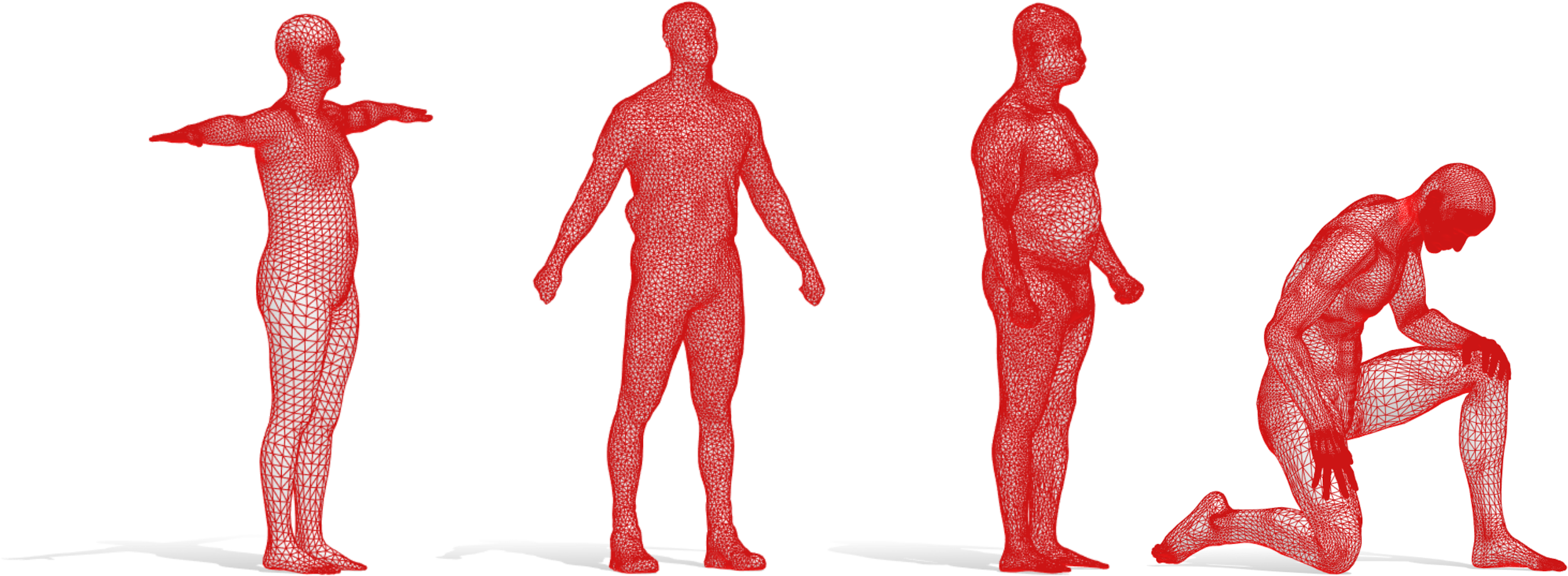

Shape matching plays an important role in geometry processing and shape analysis. In recent decades, much research has been devoted to improving the quality of matching between surfaces. This effort is motivated by several applications, such as object retrieval, animation and information transfer, to name just a few. Shape matching is usually divided into two main categories: rigid and non-rigid matching. In both cases, the standard evaluation is usually performed on shapes that share the same connectivity, in other words, shapes represented by the same mesh. This is mainly due to the availability of a “natural” ground truth for these shapes: in most cases, the consistent connectivity directly induces a ground truth correspondence between vertices. However, this standard practice does not allow one to estimate the robustness of a method with respect to different connectivity. With this track, we propose a benchmark to evaluate the performance of point-to-point matching pipelines when the shapes to be matched have different connectivity (see Figure 1). We consider the concurrent presence of 1) different meshing, 2) rigid transformations in 3D space, 3) non-rigid deformations, 4) different vertex density, ranging from 5K to more than 50K vertices, and 5) topological changes induced by mesh gluing in areas of contact. The correspondence between these shapes is obtained through the recently proposed registration pipeline FARM [1]. This method provides a high-quality registration of the SMPL model [2] to a large set of human meshes coming from different datasets, from which we obtain a well-defined correspondence among all the registered meshes and SMPL itself.

Utility

Although recent publications in the field of shape analysis achieve high-quality results in point-to-point matching, their quantitative evaluations have been performed only on meshes that share the same connectivity (e.g. the widely used FAUST dataset [3]). The only quantitative evaluation of robustness to different vertex density and distribution is performed by independently remeshing the shapes, as done in [4]. Otherwise, this robustness is usually not evaluated. This gap suggests that the community needs a new benchmark where robustness to this kind of nuisance can be assessed. We believe that by constructing a new, large, specific and challenging dataset of human bodies discretized with different density and mesh connectivity, we will provide a valuable testbed and hopefully foster further interest of the community in this more realistic scenario.

Benchmark

The complete dataset consists of hundreds of shape pairs composed of meshes that represent deformable human body shapes. These shapes undergo changes in pose and identity. The meshes exhibit variations of two different types: density (from 5K to 50K vertices) and distribution (uniform and non-uniform). There will be three different settings: SMPL-to-all, all-to-SMPL, and all-to-all. For each of these cases we will consider two different competitions: one for descriptor repeatability, and one for dense correspondence pipelines. The dataset is composed of the collection of all the shapes involved in the evaluation (for each of which we will indicate the original dataset to which it belongs). We will then provide the list of the pairs that will be involved in the evaluation.

DATASET

Download the dataset: DOWNLOAD

A brief overview of the included data: metadata

Challenge pairs: pairs

Provided to submitters

- A collection of meshes with different connectivity, subject and pose in .obj format, each named with a plain number (e.g. 35.obj).

- For every mesh, the dataset to which it belongs, its original name, and some properties (metadata).

- A list of the pairs (e.g. 35,28) for which estimated correspondences must be submitted in order to participate in the track (pairs).
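As a minimal sketch of how the provided files might be consumed, the Python snippet below parses a pairs list and counts the vertices of an .obj mesh. It assumes one pair per line in the form `35,28` (matching the example above) and that vertex positions sit on lines beginning with `v`, as in the standard Wavefront OBJ format. The helper names `parse_pairs` and `count_obj_vertices` are hypothetical and not part of the track material.

```python
from typing import List, Tuple


def parse_pairs(text: str) -> List[Tuple[int, int]]:
    """Parse lines such as '35,28' into (source, target) mesh numbers."""
    pairs = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        a, b = line.split(",")
        pairs.append((int(a), int(b)))
    return pairs


def count_obj_vertices(obj_text: str) -> int:
    """Count geometric vertices ('v x y z' lines) in OBJ file contents."""
    # Lines starting with 'vn' (normals) or 'vt' (texture coordinates)
    # are deliberately not counted.
    return sum(1 for line in obj_text.splitlines()
               if line.split()[:1] == ["v"])
```

The vertex count of the first mesh in a pair is the number of indices expected in the corresponding result file.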

Requested from submitters

- For each pair, a file in .txt format named after the pair (e.g. 35,28.txt) containing the correspondence. An example of the expected file can be downloaded here.

- A MATLAB script that generates the example txt file can be found here.

- The requested correspondence is vertex-to-vertex. If the two shapes of the pair A_B have A.nv and B.nv vertices respectively, the txt file is expected to contain an ordered list of A.nv indices. These indices belong to the interval [1:B.nv]. The i-th index of this list is the index of the vertex of B that corresponds to the i-th vertex of A.

- A brief description of the method (including average offline preprocessing and per-query runtime), with the references needed for the description.

- For each method, it should be stated whether it is to be considered a point descriptor or a matching pipeline.

- The folder, compressed as a zip file, and the description of the method should be sent to simone.melzi@univr.it. We will reply to every received submission with a confirmation e-mail. The deadline for submission is March 3rd 2019 at 23:59 UTC / GMT.
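The correspondence format described in the list above can be sketched in Python as follows. This is an illustration under assumptions, not the organizers' script: it assumes one integer index per line in the txt file, and the function names `format_correspondence` and `to_one_based` are hypothetical. Indices are 1-based, so results computed with 0-based arrays need to be shifted before writing.

```python
from typing import List


def to_one_based(zero_based: List[int]) -> List[int]:
    """Convert 0-based vertex indices to the 1-based convention."""
    return [i + 1 for i in zero_based]


def format_correspondence(match: List[int], nv_a: int, nv_b: int) -> str:
    """Return the text of a correspondence file for the pair (A, B).

    match[i] is the 1-based index of the vertex of B matched to the
    (i+1)-th vertex of A. One index per line is an assumed layout.
    """
    if len(match) != nv_a:
        raise ValueError(f"expected {nv_a} indices, got {len(match)}")
    for idx in match:
        if not 1 <= idx <= nv_b:
            raise ValueError(f"index {idx} outside [1, {nv_b}]")
    return "\n".join(str(idx) for idx in match) + "\n"
```

For example, `format_correspondence(to_one_based([2, 0, 1]), nv_a=3, nv_b=3)` yields three lines containing 3, 1 and 2. Writing the indices as plain integers avoids the precision loss that floating-point formatting can introduce.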

Submission

Participants are required to submit for each pair of shapes (M,N) a vector of indices, with length equal to the number of vertices of M. The i-th entry of this vector is the index of the estimated corresponding vertex on N. The result for each pair must be saved with the name of the corresponding pair. The results of each algorithm or parameter setting must be submitted as a single, separate folder containing the vectors for all the pairs of the challenge. Up to 3 parametrizations are allowed for each participating method. Average offline preprocessing and per-query runtimes should also be submitted.
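Since every result folder must cover all the challenge pairs, a quick completeness check before zipping may be useful. The Python sketch below compares the file names found in a folder with those expected from the pairs list. It assumes the `35,28.txt` naming shown earlier, and the function name `missing_and_extra` is hypothetical.

```python
from typing import Iterable, List, Set, Tuple


def missing_and_extra(present: Iterable[str],
                      pairs: List[Tuple[int, int]]
                      ) -> Tuple[Set[str], Set[str]]:
    """Compare the txt files in a result folder with the expected ones.

    Returns (missing, extra): expected files that are absent, and txt
    files that do not correspond to any challenge pair.
    """
    expected = {f"{a},{b}.txt" for a, b in pairs}
    found = {name for name in present if name.endswith(".txt")}
    return expected - found, found - expected
```

In practice, `present` could be the output of `os.listdir` on the folder of one parameter setting; both returned sets should be empty before the folder is submitted.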

Evaluation

Each method will be evaluated using the de facto standard evaluation protocol proposed by Kim et al. [5], taking as ground truth the matches provided by the FARM registration pipeline.
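For orientation, the protocol of Kim et al. [5] is commonly summarized as follows: the error of a match is the geodesic distance on the target shape between the predicted vertex and the ground-truth vertex, normalized by the square root of the target's surface area, and methods are compared through the fraction of matches whose error falls below a varying threshold. The Python sketch below illustrates this under stated assumptions: geodesic distances are supplied as a precomputed matrix `dist`, indices are 0-based, and the function names are hypothetical. It is not the released evaluation code.

```python
import math
from typing import List, Sequence


def normalized_errors(pred: Sequence[int],
                      gt: Sequence[int],
                      dist: Sequence[Sequence[float]],
                      area: float) -> List[float]:
    """Geodesic error of each match, normalized by sqrt(target area).

    pred[i] and gt[i] are the predicted and ground-truth target vertices
    (0-based) for source vertex i; dist[u][v] is the geodesic distance
    between target vertices u and v (assumed precomputed).
    """
    scale = math.sqrt(area)
    return [dist[p][g] / scale for p, g in zip(pred, gt)]


def cumulative_curve(errors: Sequence[float],
                     thresholds: Sequence[float]) -> List[float]:
    """Fraction of matches with error at or below each threshold."""
    n = len(errors)
    return [sum(e <= t for e in errors) / n for t in thresholds]
```

Plotting the output of `cumulative_curve` against the thresholds gives the usual cumulative error curve; a curve that rises faster indicates more accurate correspondences.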

Evaluation Code

Evaluation code and ground truth correspondences have been released on GitHub.

References

[1]: FARM: Functional automatic registration method for 3D human bodies, Marin et al, arXiv 2018 (currently under review).

[2]: SMPL: A skinned multi-person linear model, Loper et al, TOG 34(6), 2015.

[3]: FAUST: Dataset and Evaluation for 3D Mesh Registration, Bogo et al, CVPR 2014.

[4]: Continuous and Orientation-preserving Correspondences via Functional Maps, Ren et al, SIGGRAPH ASIA 2018.

[5]: Blended intrinsic maps, Kim et al, TOG 30(4), 2011.

News

18th, May

Evaluation code and ground truth correspondences released!

6th, May

Presented at the 3DOR Workshop in Genova! Thank you all!

25th, February

Fixed MATLAB script to generate example txt: use dlmwrite() instead of csvwrite() to prevent precision loss.

15th, February

Data available.

Deadline: March 3rd 2019 at 23:59 UTC / GMT.

6th, February

Website deployed.

5th, February

Track Accepted!

Contact

Simone Melzi

simone.melzi@univr.it

Organizers

Simone Melzi

(University of Verona, Italy)

simone.melzi@univr.it

https://sites.google.com/site/melzismn/

Riccardo Marin

(University of Verona, Italy)

riccardo.marin_01@univr.it

http://profs.scienze.univr.it/~marin/

Emanuele Rodolà

(Sapienza University of Rome, Italy)

rodola@di.uniroma1.it

http://sites.google.com/site/erodola/

Umberto Castellani

(University of Verona, Italy)

umberto.castellani@univr.it

http://profs.sci.univr.it/~castella